Machine learning: Data, data everywhere, but not enough to think

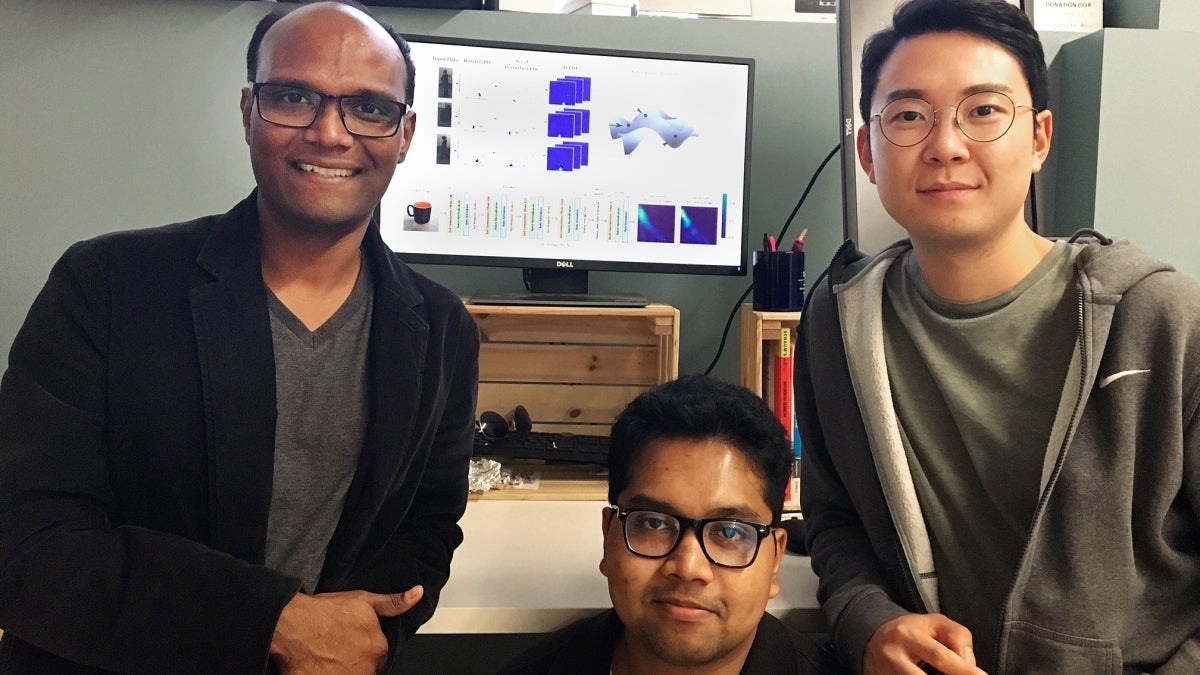

Pavan Turaga (left) and his research team are applying geometry and topology to machine learning models to make them more efficient and less reliant on large quantities of data. Photo courtesy of Pavan Turaga

Imagine a smartphone app that can identify whether your pet is a cat or dog based on a photo. How does it know? Enter machine learning and an unimaginable amount of data.

But teaching a machine is more complicated than just feeding it data, says Pavan Turaga, an associate professor in Arizona State University's School of Electrical, Computer and Energy Engineering and interim director of the School of Arts, Media and Engineering. Turaga is trying to find ways to revolutionize how machines learn about the world.

“The work that I’m doing is leaning toward the theoretical aspects of machine learning,” Turaga said. “In current machine learning, the basic assumption is that you have these algorithms that can try to solve problems if you feed them lots and lots of data.”

Turaga’s research, which has numerous applications in the defense industry, is supported by the Army Research Office, an organization within the U.S. Army Combat Capabilities Development Command’s Army Research Laboratory.

But at the base of many of machine learning’s applications, no one knows how much data is actually needed to obtain accurate results. So, when the model doesn’t work, it’s difficult to understand why.

For simple apps like pet identification, it is relatively inconsequential if the program is wrong. However, more critical applications of machine learning, such as those found in self-driving cars, can have more detrimental impacts if they operate incorrectly.

“Many times, you do not actually have the ability to create such large datasets, and that’s why many of these autonomous systems are being held back from mission-critical deployment,” Turaga said. “If something is mission critical, on which people’s lives depend and on which other large investments depend, there is skepticism on how these things will pan out when we don’t really know the breakdown.”

Relying solely on data, a self-driving car might incorrectly identify a person on a street corner as stationary when the person is actually planning on crossing the street, or a home security sensor could completely miss detecting an intruder or incorrectly identify certain events.

Improving machine learning by studying geometry and topology

In the real world, there are countless variables that can produce endless outcomes, and it would take an ever-increasing amount of data to account for every circumstance that could potentially occur. Even for identifying objects, machines would need an innumerable amount of data to be able to recognize any given object in any orientation, and in any lighting condition.

But why reinvent the wheel when we can supplement machine learning with basic principles that have already been proven?

Much like students in the Ira A. Fulton Schools of Engineering at ASU, machines have to learn the proven laws of the physical world.

“It seems like machine learning is creating its own separate representations of the world, which may or may not be rooted in basic things, like Newton’s laws of motion, for instance,” said Turaga, who leads the Geometric Media Lab at ASU. “So, there is a big disparity between what is known and what machine learning is attempting to do. We are at an intersection of trying to fuse these two to create more robust models, which can work even if we don’t have access to extremely large datasets.”

Turaga’s research involves applying two principles, Riemannian geometry and topology, to provide constraints to machine learning and reduce the size of the dataset needed to “teach” machines.

Riemannian geometry essentially is the study of smoothly curved shapes. It can be used to help mathematically describe ordinary objects using physics-based constraints.
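To make the idea of measuring along a curved shape concrete, here is a minimal sketch in Python. It is an illustration only, not Turaga's method: the function names and the choice of a unit sphere are assumptions made for this example. It compares the distance between two points measured along the sphere's surface with the straight-line distance that cuts through its interior.

```python
import math


def geodesic_distance(p, q):
    """Distance between two unit vectors measured along the sphere's surface."""
    dot = sum(a * b for a, b in zip(p, q))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    dot = max(-1.0, min(1.0, dot))
    return math.acos(dot)


def chord_distance(p, q):
    """Ordinary straight-line distance, which cuts through the sphere."""
    return math.dist(p, q)


# Two hypothetical points on a unit sphere: the north pole and a point on the equator.
north_pole = (0.0, 0.0, 1.0)
equator_point = (1.0, 0.0, 0.0)

surface = geodesic_distance(north_pole, equator_point)
straight = chord_distance(north_pole, equator_point)
print(f"along the surface: {surface:.4f}, straight line: {straight:.4f}")
```

The two numbers differ because the straight line ignores the constraint that the points live on a curved surface. Respecting that constraint is the kind of prior knowledge the article describes.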

Topology is the study of an object’s geometric properties that remain unchanged through the object’s deformation. For example, if you open and then close a pair of scissors, the shape of the scissors looks extremely different, but the object itself and most of its properties do not change.
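One classic quantity that stays fixed under deformation is the Euler characteristic: vertices minus edges plus faces. The sketch below is a hypothetical illustration, not taken from Turaga's research. It computes that number for two triangle meshes, a tetrahedron and an octahedron, which look different but can both be smoothly inflated into a sphere.

```python
from itertools import combinations


def euler_characteristic(faces):
    """Return V - E + F for a mesh given as a list of triangular faces."""
    vertices = {v for face in faces for v in face}
    # Each triangle contributes three edges; the set removes shared duplicates.
    edges = {frozenset(pair) for face in faces for pair in combinations(face, 2)}
    return len(vertices) - len(edges) + len(faces)


# Faces are listed by vertex index only. Vertex positions are deliberately
# absent, because stretching or bending the shape does not change the count.
tetrahedron = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]
octahedron = [
    (0, 2, 4), (0, 4, 3), (0, 3, 5), (0, 5, 2),  # top four faces
    (1, 2, 4), (1, 4, 3), (1, 3, 5), (1, 5, 2),  # bottom four faces
]

print(euler_characteristic(tetrahedron), euler_characteristic(octahedron))
```

Both shapes give the same value even though their vertex, edge and face counts differ, which is the sense in which a topological property survives a change in appearance.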

In his research, Turaga has looked at multiple objects and modeled them using equations. In doing so, he has found that concepts from Riemannian geometry and topology keep recurring.

“It turns out that if you know a little bit more about the kind of object you’re looking at, such as whether it’s convex-shaped, that provides a nice mathematical constraint,” Turaga said.

Getting back to object basics can improve three areas of machine learning

Not every physical principle can be efficiently accounted for, but by applying these new methods, Turaga hopes to see three main improvements to machine learning accomplished by his research: better interpretability, more versatility and higher generalizability.

“If we succeed in this research endeavor, one thing we’ll see is machine learning models becoming a little more interpretable — meaning when they work, or even when they fail, we will be able to explain the phenomenon in a way that relates to known knowledge about the world, which at this point does not exist,” Turaga said.

With better interpretability, machine learning errors can be better understood, corrected and improved, speeding up the overall rate at which autonomous systems can be developed and implemented.

Furthermore, Turaga’s research is expected to make machine learning models more adaptable.

For example, if a machine is first tested in a lab and then deployed on a ship, it will be subject to extremely different conditions than it was in the laboratory setting. Instead of trying to retrain the machine from scratch, Turaga hopes to be able to provide the machine with information based on known principles of the new environment and fine-tune its performance using small datasets during deployment.

Finally, Turaga expects machine learning to become faster and more efficient. He says training machine learning models is currently a huge energy sink, consuming electricity at a rate comparable to what is needed to supply power to a small country.

Increasing the efficiency of machine learning will also have environmental benefits.

On its current track, machine learning could “undo all the good things we are doing to make our world more energy efficient,” Turaga said. “But I believe if we are successful in training machine models faster by using knowledge from physics and mathematics, then the whole field can reduce its carbon footprint.”
