When Troy McDaniel was a senior at Arizona State University, he thought he had the next chapter of his life planned out. After completing his bachelor’s degree in computer science, he would enter the workforce and find a job in the programming industry. But a few months before graduation, McDaniel started an independent study at ASU’s Center for Cognitive Ubiquitous Computing (CUbiC), and his whole plan changed.

The center focuses on machine learning and pattern recognition, human-computer interaction and haptics (a science concerned with the sense of touch), with applications for assistive and rehabilitative technologies.

“The idea of developing something that could go toward helping someone with an impairment was really motivating to me,” McDaniel said.

Undergraduate and even high school students are welcome in the lab, where they are paired up with graduate student researchers based on their skills and interests.

Simulating surgery

As an undergraduate, McDaniel wanted to explore the world of computer simulations and multimedia information systems. He took a class co-taught by Sethuraman “Panch” Panchanathan, the founding director of CUbiC, and was inspired by a lecture to join the lab. Through his independent study, McDaniel had the opportunity to apply his research interests and help surgical residents at a local hospital.

Specifically, the residents were training for laparoscopic surgery. This type of procedure, also called Band-Aid or keyhole surgery, is minimally invasive for the patient but can be challenging for surgeons.

“They have to hone some very precise skills,” McDaniel said. “Instead of opening the abdomen up, you make small incisions, stick the tools in, get the work done and get out of there.”

Because the incisions are so small, surgeons must develop precise fine motor skills. They practice by using the surgical tools to perform certain tasks, like placing a ring on a peg.

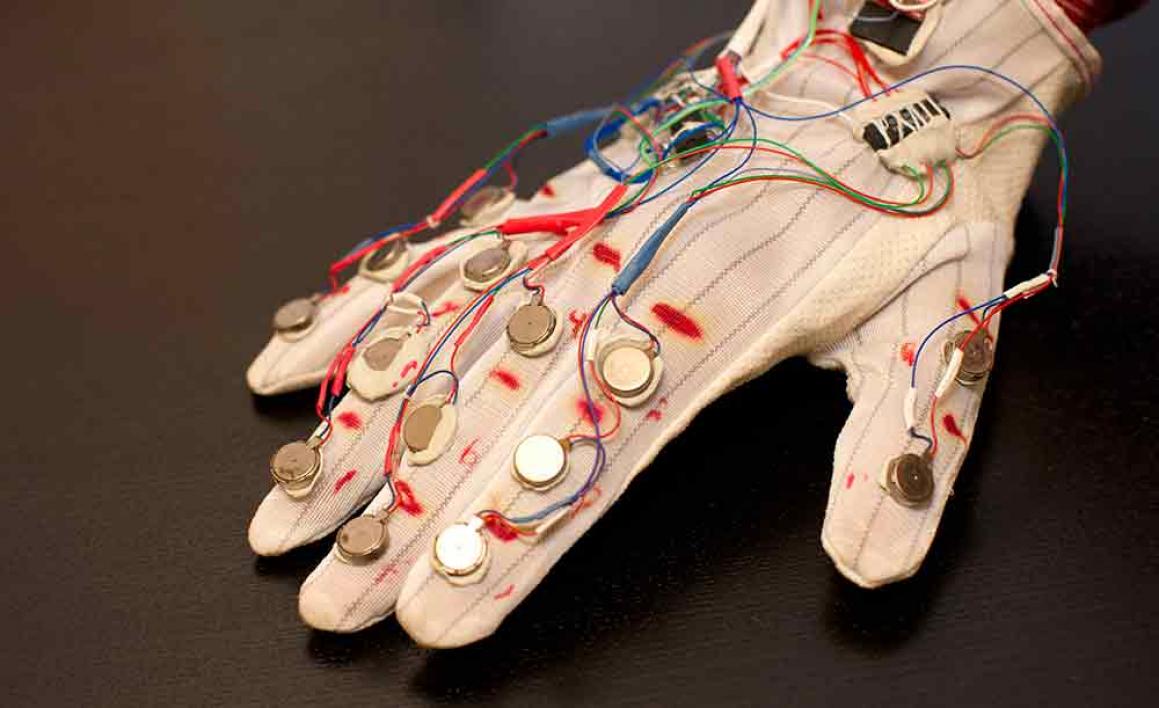

McDaniel and his mentors at CUbiC saw an opportunity to improve the training procedures. The team connected the laparoscopic surgery tools to a device that could provide haptic, or touch-based, feedback. This mimicked what the residents would feel during an actual surgery. McDaniel developed a simulated graphical model of surgeons’ hand movements, driven by “cybergloves,” which are wearable gloves embedded with sensors for detecting joint angles in the fingers.

“We were able to capture how many mistakes they made, how much time it took, and provide some real-time feedback,” McDaniel said.

After receiving his bachelor’s degree, McDaniel continued his studies and ultimately received his doctorate from ASU with Panchanathan as his adviser. He secured a postdoctoral fellowship that turned into an assistant research professorship and led to his current role as associate director of CUbiC. Now, McDaniel mentors other students so they can experience and benefit from research in the lab.

‘Seeing’ through touch

One of these students was Shantanu Bala. As a high school student, Bala was interested in assistive and rehabilitative technologies. He volunteered at CUbiC for two years to gain experience and learn more about the field. After graduating from high school, he enrolled at ASU with a double major in psychology and computer science and rejoined CUbiC as a student researcher. He began exploring ways to help visually impaired people have more enriching social interactions.

Sighted people take for granted a multitude of visual cues that enhance interpersonal communication. These include the location of others, which way they are facing, body language, facial expressions and hand gestures. Bala wanted to develop technology that could convey facial expressions.

“We were looking for some way of communicating information that wouldn’t interrupt the conversation,” Bala said. “You can’t really wear headphones or have a speaker or something like that, so we were left with the sense of touch and figuring out how to communicate things entirely through touch.”

The CUbiC team developed and tested two devices to address this challenge. One is a chair connected to a camera. When a visually impaired person sits in the chair, the camera can record another person’s facial expression and send that information, based on a predefined visual-tactile mapping, to vibrotactile motors on the chair. The motors cause the chair to vibrate in different formations to indicate a smile, frown or other facial expression, helping the user better gauge the interaction.
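The article does not spell out the mapping the team used. As a purely illustrative sketch, a predefined visual-tactile mapping could be pictured as a lookup table from a recognized expression to the motors that should vibrate. Everything here is an assumption made for illustration: the 3x3 motor grid, the expression labels, the patterns themselves and the `motors_for` helper are hypothetical, not CUbiC's design.

```python
from typing import Dict, List, Tuple

# Hypothetical 3x3 grid of vibrotactile motors: 1 = vibrate, 0 = off.
# Grid size and patterns are illustrative assumptions, not the real device.
Pattern = List[List[int]]

EXPRESSION_PATTERNS: Dict[str, Pattern] = {
    # Upward curve, loosely shaped like a smile.
    "smile": [[0, 0, 0],
              [1, 0, 1],
              [0, 1, 0]],
    # Downward curve, loosely shaped like a frown.
    "frown": [[0, 0, 0],
              [0, 1, 0],
              [1, 0, 1]],
    # Flat line for a neutral expression.
    "neutral": [[0, 0, 0],
                [1, 1, 1],
                [0, 0, 0]],
}


def motors_for(expression: str) -> List[Tuple[int, int]]:
    """Return (row, column) positions of motors to activate.

    Unknown expressions map to an empty list, so nothing vibrates.
    """
    pattern = EXPRESSION_PATTERNS.get(expression, [])
    return [
        (r, c)
        for r, row in enumerate(pattern)
        for c, on in enumerate(row)
        if on
    ]


if __name__ == "__main__":
    print(motors_for("smile"))
```

In a sketch like this, the camera-side software would only need to output a label such as "smile", and the table decides which motors fire. Keeping the mapping in a table rather than in code makes it easy to test different patterns with users.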

Another device Bala worked on is a variation of the cyberglove. Instead of mimicking the sensations of surgery, Bala’s glove provides haptic feedback in the shape of a facial expression on the back of a user’s hand.

Bala worked at CUbiC for a total of six years — two during high school and four as an ASU undergraduate. The semester before he graduated with his bachelor’s degree, Bala was offered a Thiel Fellowship. These competitive awards are marketed as an incentive for talented young entrepreneurs to drop out of school and pursue their ventures full-time.

Bala, however, was able to finish his studies and start the fellowship after graduating instead. Now, he’s continuing the assistive technology work he started at CUbiC, this time with a wearable wristband he hopes to patent and bring to market.

The device is similar to a smartwatch, except it doesn’t have a screen. Instead, it communicates via sensations that move across the skin. For example, if the user is following directions and needs to make a left turn, the wristband can provide those cues entirely through the haptic feedback.
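How the wristband produces these moving sensations is not described here. One way to picture the idea, as a rough sketch only, is a row of small motors fired one after another so the vibration seems to travel across the wrist in the direction of the turn. The motor count, the direction labels and the `sweep_sequence` function are all hypothetical and chosen only for illustration.

```python
from typing import List


def sweep_sequence(direction: str, num_motors: int = 4) -> List[int]:
    """Return the order in which to fire motors for a directional cue.

    Assumes (hypothetically) that motors are numbered 0..num_motors-1
    from the left side of the wrist to the right side.
    """
    order = list(range(num_motors))
    if direction == "right":
        # Fire left to right so the sensation moves rightward.
        return order
    if direction == "left":
        # Fire right to left so the sensation moves leftward.
        return order[::-1]
    raise ValueError(f"unsupported direction: {direction!r}")


if __name__ == "__main__":
    print(sweep_sequence("left"))
```

A real device would also need timing between pulses and a way to drive the hardware, both of which are left out of this sketch.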

Like McDaniel, Bala was inspired to pursue research at CUbiC because he wanted to help people.

“I don’t think I would be as engaged with the research if I felt that the end product wouldn’t be contributing to someone’s life in a very tangible way,” Bala said. “It makes me feel much better about the work, and I really enjoyed working with people at CUbiC because everybody has that shared goal or shared enthusiasm for building projects like that.”

Do-it-yourself solutions

Other students at CUbiC have been motivated by a problem they were facing firsthand. That was the case for ASU student David Hayden, a double major in computer science and mathematics. Hayden is legally blind, and his impaired vision was hindering his ability to keep up in his upper-division math classes. He had an optics piece that made the board easier to see, but he would lose a lot of time between looking up and reading the board, then looking back down to write his notes.

“I was struggling with the assistive technologies that already existed, and at some point I realized they just weren’t solving my problems,” Hayden said.

Hayden approached Panchanathan to find out if the CUbiC team had a solution, but Panchanathan had a different idea.

“I said, ‘You’re a very bright student. Why don’t you come and work in my lab with the other students to see how we can solve this problem? Who better understands this problem than you?’” Panchanathan recalled.

Hayden rose to the challenge. Working with a team of mechanical engineers, electrical engineers and industrial designers, he conceived an idea and secured funding from the National Science Foundation to help bring it to fruition.

He developed a device that combines a custom-built camera and a tablet computer. The camera can zoom and tilt as needed to capture a classroom lecture. It uploads video footage to the tablet, which has a split-screen interface. That way, the user can watch the video on one half of the screen and make typed or handwritten notes on the other half simultaneously.

Hayden called the device NoteTaker. After he graduated from ASU, his team formed a company to make the invention widely available. Today, about 50 people ranging in age from 7 to 55 are using NoteTaker. Hayden’s team also won first place in the Software Design category of the Microsoft Imagine Cup U.S. Finals, and then went on to take second place in the same category of the Imagine Cup World Finals.

Hayden is now a graduate student at the Massachusetts Institute of Technology, working on computer vision and machine learning projects. He wants to use technology to improve social interactions for people at all levels of the ability spectrum.

“I imagine wearable computers that stay out of our way, that don’t interrupt us when we’re engaging with others, but at small points in time can give us little bits of useful information just in the moment,” Hayden said. For him, disability has been a personal “call to arms” to create solutions. Hayden credits CUbiC for providing the tools he needed to solve his own challenges.

“CUbiC was really a nurturing space for me to build a worldview that enabled me and continues to enable me to work on technological solutions to everyday problems, whether they’re for people who are disabled or not.”
