Mind reading in the lab: New ASU professor decodes brain activity to understand memory and attention

Arizona State University’s Gi-Yeul Bae “reads minds” by decoding the brain’s electrical activity. Photo by Robert Ewing

The X-Men’s Professor Charles Xavier uses Cerebro to read minds. Arizona State University’s Gi-Yeul Bae “reads minds” by decoding the brain’s electrical activity.

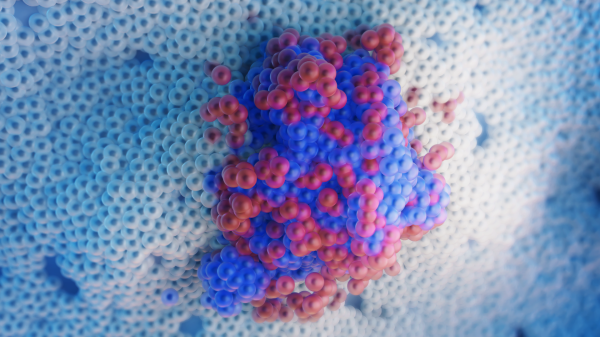

Neurons in the brain communicate with each other using electrical activity, and Bae records this activity using a technique called electroencephalography, or EEG for short. He then feeds the EEG data into a computer algorithm that learns the relationships among patterns of brain activity and the information encoded in the brain. This algorithm helps Bae better understand memory and attention.

“We can decode what people saw through the spatial patterns of brain activity, and we can use this information to answer challenging questions in psychology, like whether paying attention to an item is the same thing as holding it in your memory,” said Bae, who recently joined the faculty in the ASU Department of Psychology as an assistant professor. “It is very difficult to decode abstract thoughts, but we can figure out visual features like the tilt angle of an object.”

People who participate in an EEG experiment wear a tightly fitting cap that positions an array of electrodes across the scalp. These electrodes measure the brain’s electrical activity. To decode how the electrical signals are related to brain functions, Bae and his collaborators divide the EEG dataset into several parts. One part of the dataset is used to train the decoding algorithm. In this step, the algorithm finds associations between patterns of brain activity and events like remembering how an object is positioned in space. The remaining parts of the EEG dataset are then used to test the associations found by the algorithm.
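The train-then-test procedure described above can be sketched in a few lines of Python. This is an illustration only, not the team's algorithm: the data are simulated, the nearest-average classifier is a simple stand-in, and every name and number here (`make_trials`, `decode_accuracy`, 16 electrodes, 4 stimulus categories, 3 parts) is an assumption chosen for the example.

```python
import random

def make_trials(n_trials, n_electrodes, n_classes, noise_sd, rng):
    """Simulate trials: each class has a fixed scalp pattern plus Gaussian noise."""
    patterns = [[rng.gauss(0, 1) for _ in range(n_electrodes)]
                for _ in range(n_classes)]
    trials, labels = [], []
    for i in range(n_trials):
        label = i % n_classes
        trials.append([p + rng.gauss(0, noise_sd) for p in patterns[label]])
        labels.append(label)
    return trials, labels

def fit_centroids(trials, labels):
    """Training step: average the scalp pattern over trials for each label."""
    grouped = {}
    for x, y in zip(trials, labels):
        grouped.setdefault(y, []).append(x)
    return {y: [sum(col) / len(xs) for col in zip(*xs)]
            for y, xs in grouped.items()}

def predict(centroids, x):
    """Pick the label whose average pattern is closest to this trial."""
    return min(centroids,
               key=lambda y: sum((a - b) ** 2 for a, b in zip(centroids[y], x)))

def decode_accuracy(trials, labels, n_parts, rng):
    """Train on one part of the data, then test on the remaining parts."""
    order = list(range(len(trials)))
    rng.shuffle(order)  # assign trials to parts at random
    parts = [order[k::n_parts] for k in range(n_parts)]
    train, test = parts[0], [i for part in parts[1:] for i in part]
    centroids = fit_centroids([trials[i] for i in train],
                              [labels[i] for i in train])
    hits = sum(predict(centroids, trials[i]) == labels[i] for i in test)
    return hits / len(test)

rng = random.Random(0)  # fixed seed so the simulation is repeatable
trials, labels = make_trials(240, 16, 4, 1.0, rng)
accuracy = decode_accuracy(trials, labels, 3, rng)
print(f"decoding accuracy: {accuracy:.2f} (chance = 0.25)")
```

Testing only on trials the classifier never saw during training is what makes an above-chance score meaningful: it shows the learned associations generalize rather than merely describing the training data.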

“Thanks to the high temporal resolution of EEG — we can take a measurement every 4 milliseconds, which is 25 times faster than an eye blink — we can track the temporal dynamics of brain activity and see how information used in forming memories evolves over time,” Bae said.

Bae and his collaborators recently tested whether they could separate brain activity related to remembering an object’s location from activity related to remembering its appearance. Each trial in the experiment showed a tear-shaped droplet that was rotated to a random angle. The droplet briefly appeared on a computer screen, and after it disappeared the participants had to remember the exact tilt angle of the droplet. Following a short delay, they used a mouse to report the droplet angle.

In the decoding experiments, the research team focused on two types of EEG signals: event-related potentials (ERPs) and alpha waves. ERPs are the average electrical response to a stimulus like seeing a tilted droplet. Alpha waves are EEG signals that oscillate eight to 12 times a second.
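To make the two signal types concrete, here is a minimal sketch on simulated data. It assumes a sampling rate of 250 samples per second (one sample every 4 milliseconds, as quoted earlier); the shape of the simulated response, the noise level and the helper names `erp` and `band_power` are all assumptions made for illustration, not the team's analysis pipeline.

```python
import math
import random

FS = 250  # samples per second, i.e. one sample every 4 ms

def simulate_epoch(rng, n_samples=250):
    """One made-up trial: a bump at 0.3 s, a 10 Hz rhythm with random phase, noise."""
    phase = rng.uniform(0, 2 * math.pi)
    epoch = []
    for t in range(n_samples):
        sec = t / FS
        evoked = 2.0 * math.exp(-((sec - 0.3) ** 2) / (2 * 0.05 ** 2))
        alpha = math.sin(2 * math.pi * 10 * sec + phase)
        epoch.append(evoked + alpha + rng.gauss(0, 0.5))
    return epoch

def erp(epochs):
    """ERP: average the stimulus-locked trials sample by sample."""
    return [sum(col) / len(epochs) for col in zip(*epochs)]

def band_power(signal, fs, low_hz, high_hz):
    """Sum of squared DFT magnitudes for frequency bins within [low_hz, high_hz]."""
    n = len(signal)
    power = 0.0
    for k in range(n // 2 + 1):
        if low_hz <= k * fs / n <= high_hz:
            re = sum(x * math.cos(2 * math.pi * k * t / n)
                     for t, x in enumerate(signal))
            im = sum(x * math.sin(2 * math.pi * k * t / n)
                     for t, x in enumerate(signal))
            power += (re * re + im * im) / n ** 2
    return power

rng = random.Random(0)  # fixed seed so the simulation is repeatable
epochs = [simulate_epoch(rng) for _ in range(100)]
average = erp(epochs)
peak_ms = 1000 * average.index(max(average)) / FS
alpha_power = band_power(epochs[0], FS, 8, 12)
other_power = band_power(epochs[0], FS, 20, 24)
print(f"ERP peak near {peak_ms:.0f} ms")
print(f"single-trial power, 8-12 Hz: {alpha_power:.3f}; 20-24 Hz: {other_power:.3f}")
```

Averaging keeps activity that is time-locked to the stimulus and shrinks activity whose timing varies from trial to trial, which is why the simulated rhythm is measured on individual trials rather than on the average.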

During the delay period, when the droplet was no longer visible and the participants were remembering its location and tilt, the decoding algorithm accurately predicted both the location and tilt angle from the pattern of ERPs on the scalp. This was not the case for alpha waves, though: That EEG signal tracked only where the droplet was located.

Bae and his team also used the decoding algorithm to test how the recent past affects what people are currently holding in their memory.

The participants’ reports of the droplet orientation were biased by the orientation of the droplet they had seen on the previous trial, even though the previous and current droplet tilt angles were unrelated. When the team used the decoding algorithm to look at a given trial, they found patterns of brain activity that encoded the tilt angle from the immediately preceding trial.
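One way to measure a bias like this in behavioral data is to compare each report's signed error with the direction of the previous trial's angle relative to the current one. The sketch below uses simulated reports. The size and direction of the simulated bias (`pull_deg`), the noise level and the function names are assumptions for illustration and are not taken from the study.

```python
import math
import random

def circ_diff(a, b):
    """Signed difference a - b in degrees, wrapped into [-180, 180)."""
    return (a - b + 180) % 360 - 180

def simulate(n_trials, pull_deg, noise_sd, rng):
    """Return (stimuli, reports); pull_deg is a made-up bias toward the previous angle."""
    stimuli = [rng.uniform(0, 360) for _ in range(n_trials)]
    reports = []
    for t, current in enumerate(stimuli):
        shift = 0.0
        if t > 0:
            rel = math.radians(circ_diff(stimuli[t - 1], current))
            shift = pull_deg * math.sin(rel)
        reports.append((current + shift + rng.gauss(0, noise_sd)) % 360)
    return stimuli, reports

def bias_by_previous(stimuli, reports):
    """Mean signed report error, split by the direction of the previous angle."""
    pos, neg = [], []
    for t in range(1, len(stimuli)):
        rel = circ_diff(stimuli[t - 1], stimuli[t])  # previous relative to current
        err = circ_diff(reports[t], stimuli[t])      # report relative to current
        (pos if rel > 0 else neg).append(err)
    return sum(pos) / len(pos), sum(neg) / len(neg)

rng = random.Random(1)  # fixed seed so the simulation is repeatable
stimuli, reports = simulate(2000, 5.0, 10.0, rng)
pos_bias, neg_bias = bias_by_previous(stimuli, reports)
print(f"mean error when previous angle was larger:  {pos_bias:+.2f} deg")
print(f"mean error when previous angle was smaller: {neg_bias:+.2f} deg")
```

If the previous trial had no influence, both averages would sit near zero. Averages with opposite signs indicate that reports shift systematically with the preceding angle, even though the angles themselves were drawn independently.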

“The information for the previous trial was still in the brain during the current trial. This decoding result suggests that the past memories get reactivated in the brain when it processes some similar information,” Bae said. “For example, your brain may reactivate your memory of a snake when you see a rope.”

Bae is currently applying the decoding method to the study of mental illness. One of his projects uses EEG decoding to understand how working memory — the process of temporarily keeping information available — differs between people with schizophrenia and their healthy peers.
