Gene-edited babies. In-home speakers that never stop recording. Social networks selling companies your personal … well, everything.

These are just some of the latest examples of how humans are pushing the boundaries of innovation in pursuit of scientific advancement and profit. They raise questions about the role of responsible innovation and how best to balance progress and corporate ambition with ethical behavior.

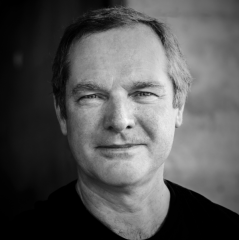

In his most recent book, “Films from the Future: The Technology and Morality of Sci-Fi Movies,” Andrew Maynard, professor in Arizona State University's School for the Future of Innovation in Society, looks at these questions through the lens of film. He shares with ASU Now why responsible innovation is needed now more than ever, and what movies can teach us about our relationship to technology and to each other.

Question: What is responsible innovation?

Answer: If we want to see the full benefits of emerging science and technology, it’s something of a no-brainer that we learn how to innovate in ways that anticipate their potential downsides, and how to avoid them. This is at the heart of what is increasingly referred to as “responsible innovation.”

When it’s stripped of the academic jargon that sometimes accompanies it, responsible innovation is about helping ensure science and technology serve as many people as possible, both now and in the future, especially in communities that are particularly vulnerable to bearing the brunt of naïve and poorly conceived ideas.

This seems obvious. But many innovators still lack the insights, the training or the tools to translate their good intentions into responsible actions.

Q: How have recent events (such as Facebook’s ongoing security issues or the birth of genetically modified babies) highlighted the need for responsible technological and scientific innovation?

A: 2018 has been something of a turning point for recognizing that powerful technologies come with great responsibility. Things have gotten so bad that New York Times contributor Kara Swisher recently asked, “Who will teach Silicon Valley to be ethical?”

In the past few weeks, the scientific community was shocked by the announcement of seemingly irresponsible embryonic gene editing in China. It’s a development that raises the specter of designer babies that will pass their engineered traits on to their own children, but with little understanding of what might go wrong.

This past year we’ve also seen growing concerns around the potential dangers of embracing artificial intelligence without thinking deeply about the consequences. In March, we saw the first pedestrian death associated with a self-driving car. And, of course, it’s hard to avoid the ethical minefield around how companies like Facebook are using and abusing personal data.

In many of these cases, scientists and innovators have good intentions. But as we’re fast learning, good intentions are meaningless without a broader understanding of what it means to innovate responsibly, and how to do this effectively.

Q: Your book, “Films from the Future: The Technology and Morality of Sci-Fi Movies,” explores trends in technology and innovation, and the social challenges they present, through the lens of the movies we watch. What can movies teach us about our relationship with technology and with each other?

A: Science fiction movies are notoriously bad when it comes to getting the “science” bit right. But because they are almost universally stories about people, they are remarkably good at revealing often-hidden insights into our relationship with science and technology. This makes them especially effective as a starting point for exploring what it means to develop and use powerful new capabilities responsibly.

For instance, “Jurassic Park” is a movie about dinosaurs that plays fast and loose with the realities of genetic engineering. But it’s also a movie that presciently reflects current-day issues like the irresponsible use of gene editing, the dangers of hubristic entrepreneurialism, and the fine line between “could” and “should” when it comes to emerging science and technology.

Similarly, the film “Minority Report” — another Steven Spielberg classic — shines a searing spotlight on present-day challenges around using AI to predict criminal intent, and discriminating against people on the basis of crimes they haven’t yet committed.

And the 1995 Japanese anime “Ghost in the Shell” provides a remarkably insightful perspective on what it means to be human in an age where we are increasingly augmenting our bodies with advanced technologies.

With the appropriate guide, these and many more movies can help us better understand the nuances and complexities of how real-world technologies affect our lives, and how to get them right.

Q: What future trend most encourages you or alarms you?

A: As a scientist and a bit of a geek, I must admit that I’m excited by a lot of what’s going on in technology innovation at the moment. At the same time, the professional worrier in me is extremely anxious about the lack of forethought in many of these areas, and how our naïve optimism is likely to come back and bite us if we don’t get our act together.

At the top of my “worry” list has to be how machine learning and other forms of AI are becoming more mainstream, without a clear sense of what the potential downsides are to systems that make decisions without us fully understanding how. This is particularly concerning where machines are being used to tag people as “good” or “bad” (whether for crime prevention or choosing a babysitter) without strong ethical guide rails.

It’s also alarming how many people are unquestioningly inviting technologies into their lives that monitor their behavior (and potentially pass the data on to others) through devices like smart speakers and other internet-connected devices.

Then there are longer-term concerns around machines that learn how to use our cognitive biases against us. I’m not sure that we’ll get Terminator-like AI that will try to destroy us, but I’m pretty sure machines will one day learn how to manipulate us if we’re not careful.

I also worry about the irresponsible use of technologies like gene editing, and our increasing ability to alter the “base code” of living organisms. Gene-editing techniques like CRISPR could radically transform lives for the better, but only if we learn how to handle the power they bring with great responsibility.

That said, I think we still have a window of opportunity to learn how to develop and use these and other emerging technologies responsibly, so we see the benefits without suffering the consequences of naïve innovation. But it will require quite an innovative shift from business as usual, and maybe more time watching science fiction movies!

Top photo courtesy of pixabay.com
