To err is human, even for one of the most number-crunching, rigorous and truth-seeking of all activities: science. The issue of errors in science — irreproducible research — was a focal point for a national discussion when the Global Biological Standards Institute (GBSI) hosted its 2016 BioPolicy Summit last week in Washington, D.C.

GBSI President Leonard Freedman and co-author Tim Simcoe, associate professor at Boston University, documented that up to 50 percent of published, pre-clinical research is irreproducible, with an estimated annual cost of $28 billion in the U.S. alone.

“These are big and troubling numbers,” said Freedman. “Over the long term, science is self-correcting. In the short term, the effects of irreproducible research include the cost of time and resources … and a growing mistrust in the biomedical research enterprise.”

There is also the untold human cost of delays in breakthrough discoveries, therapies or potential new cures caused by faulty data, reagents that don’t work as advertised or experiments that can’t be validated.

The 2016 GBSI Summit, “Research Reproducibility: Innovative Solutions to Drive Quality,” welcomed premier life science thought leaders, including Arizona State University biomarker researcher Joshua LaBaer and science correspondent and moderator Richard Harris (currently on leave from National Public Radio as a visiting scholar this spring at ASU), to explore the driving forces behind the issue and its profound impacts.

LaBaer, new interim director of ASU’s Biodesign Institute and a national biomarker expert, outlined the issues facing his own lab’s efforts to improve cancer survival rates through the power of early detection. LaBaer is also the Virginia G. Piper Chair of Personalized Medicine and a professor of chemistry and biochemistry in the College of Liberal Arts and Sciences.

“One of our holy grails is a test for the early detection of cancer,” said LaBaer, an international leader in the area of proteomics and an early, enthusiastic proponent of big-data, open-science solutions. (Proteomics is the large-scale study of proteins, particularly their structures and functions; proteins are vital parts of living organisms, as they are the main components of the physiological metabolic pathways of cells, according to Wikipedia.)

“Despite tens of thousands of biomarker papers published, only about a half-dozen biomarkers have been FDA [Food and Drug Administration] approved,” he said. “That’s just a huge discrepancy in the number of papers published, and those that stood the test of time.”

Arizona State University biomarker researcher Joshua LaBaer (second from left) is partnering with the Global Biological Standards Institute to establish a pilot project creating a new gold standard and comprehensive registry of how each repository managed its sample collections.

To upend the scientific status quo and improve biomarker discovery and validation, LaBaer has led a massive effort to build fully sequence-verified clone sets for all human genes and for the genes of model organisms. The giant gene bank, called DNASU, is now managed in an automated repository with more than 250,000 samples, which are openly shared with researchers in more than 35 countries to benefit the worldwide scientific community.

Despite his pioneering efforts and those of countless colleagues working hard to improve scientific reproducibility through cutting-edge, open-source solutions, the scale of the issue has required a system-wide re-examination of the current science climate.

“The problem of fixing reproducibility, $28 billion, is an amount that represents almost the entire annual NIH [National Institutes of Health] budget,” said keynote speaker Judith Kimble, Ph.D., of the University of Wisconsin–Madison, a Howard Hughes Medical Institute investigator. Kimble emphasized, “The elephant in the room is hyper-competition.”

Never before has the U.S. produced so many Ph.D.s, nearly 80,000 annually, to compete for so few federal dollars (a threefold increase in the number of Ph.D.s compared with a generation ago, with a twofold increase in the number of NIH grant applications). This is true even after leaders in Washington voted to give the NIH a large increase in funds ($2 billion). In constant dollars, however, the amount is still down about 30 percent since before the recession, leaving the new crop of researchers to compete for less, Kimble noted.

With a flat funding environment, there is a tendency for scientists to propose what they know will work, instead of riskier research, the type that often leads to truly groundbreaking discoveries.

“Competition arises only when fields exist,” said Arturo Casadevall, professor at the Johns Hopkins Bloomberg School of Public Health. “Whenever there is competition, competitors try to use existing methods to solve the problem. Therefore, competition tends to put an end to innovation. It’s the opposite of what people often think.”

Unintentionally, research competition has led to a “more cooks, worse soup” scenario for science. As more researchers than ever apply for shrinking federal funds, the result is less transparency in sharing data and lower morale, ultimately giving researchers pause to understand how the key ingredients went astray.

But as Richard Harris poignantly pointed out during the panel discussion, since securing federal awards and producing top scientific publications are based entirely on a peer-review system, “We have met the enemy, and it is us!”

When the pressure to publish in the best journals is so high, with entire research careers at stake amid more and more competition, it’s no wonder that each research lab tends to act like a top chef, hoarding its secret sauces and recipes.

“What’s good for me as a practicing scientist is not necessarily good for science,” said Brian Nosek, professor at the University of Virginia and executive director of the Center for Open Science. “The current system rewards scientists for publishing often and getting tenure. Open data and transparency are the keys to improving reproducibility.”

“There is need for all of us to get our assumptions out there for people to see, but we need better carrots and sticks,” said Amy Herr, professor in the Department of Bioengineering at the University of California, Berkeley. She has seen the stress of competition trickle down to the attitudes of the next generation of scientists. “When students say only that they want to publish in a prestige journal instead of making a new discovery, my heart just breaks.”

To address the reproducibility issue, GBSI used the BioPolicy Summit to announce the Reproducibility2020 Challenge, which aims to ensure that major solutions for improving reproducibility are in place by the year 2020.

The action plan of the challenge calls for the entire research community to improve in three critical areas: reagent standards and validation, shared protocols and data, and improved training.

“Why many of us are working so hard in this endeavor is that we start with an understanding that data is not accurate,” said Herr. “That's just how we work.”

It’s also a key reason why LaBaer is now partnering with GBSI to establish a pilot project, called BioSpecimen Commons, to create a new gold standard and comprehensive registry of how each repository manages its sample collections.

“Translational research depends on clinical specimens,” LaBaer said. “Unfortunately, researchers are rarely told how those specimens were handled before they are used, which creates variables that can dramatically affect the research outcome. Without that critical information, you never know if you are comparing apples to apples or to some other fruit. We cannot currently dictate how samples are handled, and that may not even be advisable, but we can ask that everyone describe exactly what was done. The first step in all of this is transparency.”

For the BioSpecimen Commons, every sample will be labeled with one or more ID numbers that link it to the specific recipes used in its preparation (standard operating procedures, or SOPs). In this way, the handling of every sample will be publicly available. LaBaer envisions that as more and more people submit SOPs, other researchers can look at them and decide which one is best for them. There will be a comments section, similar to Amazon’s, where people can let the community know which SOPs worked and why. Over time, different SOPs will grow in popularity as researchers comment on which one worked best for them.

“Now, we are getting the whole community involved in saying how they did it,” LaBaer said. “And every bit of data needs to go up on the web. If we make it transparent, at least we can take a step forward, and potentially amplify it in any area of biomedical research where there are protocols to follow.”
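
For readers who think in code, the registry idea described above (samples tagged with IDs that point to the SOPs used to prepare them, plus community comments on each SOP) can be pictured with a small Python sketch. This is only an illustration under stated assumptions, not the BioSpecimen Commons design: every class, field and ID below (`Registry`, `SOP`, `Sample`, `Comment`, `"SOP-1"`, `"S-001"`) is hypothetical, and ranking SOPs by the number of "it worked" comments is just one simple way to model growing popularity.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Comment:
    author: str
    text: str
    worked: bool  # whether the SOP worked for this commenter


@dataclass
class SOP:
    sop_id: str
    title: str
    steps: list[str]
    comments: list[Comment] = field(default_factory=list)

    def worked_count(self) -> int:
        """Number of comments reporting that this SOP worked."""
        return sum(1 for c in self.comments if c.worked)


@dataclass
class Sample:
    sample_id: str
    sop_ids: list[str]  # IDs of the SOPs used to prepare this sample


class Registry:
    """Hypothetical in-memory registry linking samples to their SOPs."""

    def __init__(self) -> None:
        self.sops: dict[str, SOP] = {}
        self.samples: dict[str, Sample] = {}

    def add_sop(self, sop: SOP) -> None:
        self.sops[sop.sop_id] = sop

    def add_sample(self, sample: Sample) -> None:
        # Refuse samples that point at SOPs nobody has described yet.
        missing = [s for s in sample.sop_ids if s not in self.sops]
        if missing:
            raise KeyError(f"unknown SOP id(s): {missing}")
        self.samples[sample.sample_id] = sample

    def handling_of(self, sample_id: str) -> list[SOP]:
        """Return the SOPs that document how a sample was handled."""
        return [self.sops[s] for s in self.samples[sample_id].sop_ids]

    def add_comment(self, sop_id: str, comment: Comment) -> None:
        self.sops[sop_id].comments.append(comment)

    def most_endorsed(self) -> list[SOP]:
        """SOPs ordered by how many commenters said they worked."""
        return sorted(
            self.sops.values(), key=lambda s: s.worked_count(), reverse=True
        )


# Made-up example data, for illustration only.
registry = Registry()
registry.add_sop(SOP("SOP-1", "Example protocol A", ["step 1", "step 2"]))
registry.add_sop(SOP("SOP-2", "Example protocol B", ["step 1"]))
registry.add_sample(Sample("S-001", ["SOP-1"]))
registry.add_comment("SOP-1", Comment("lab_a", "Worked for our assay", True))
registry.add_comment("SOP-2", Comment("lab_b", "Did not work for us", False))

handling_titles = [sop.title for sop in registry.handling_of("S-001")]
top_sop_id = registry.most_endorsed()[0].sop_id
```

In this sketch, looking up a sample returns the full description of how it was handled, which is the transparency step LaBaer describes; a real registry would also need persistent storage, versioned SOPs and some form of moderation.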

If more researchers can adopt such big-data, validated open science, it will not only help shape tomorrow’s discoveries, but also bring more value to the health and well-being of society.

To learn more, go to GBSI’s Reproducibility2020

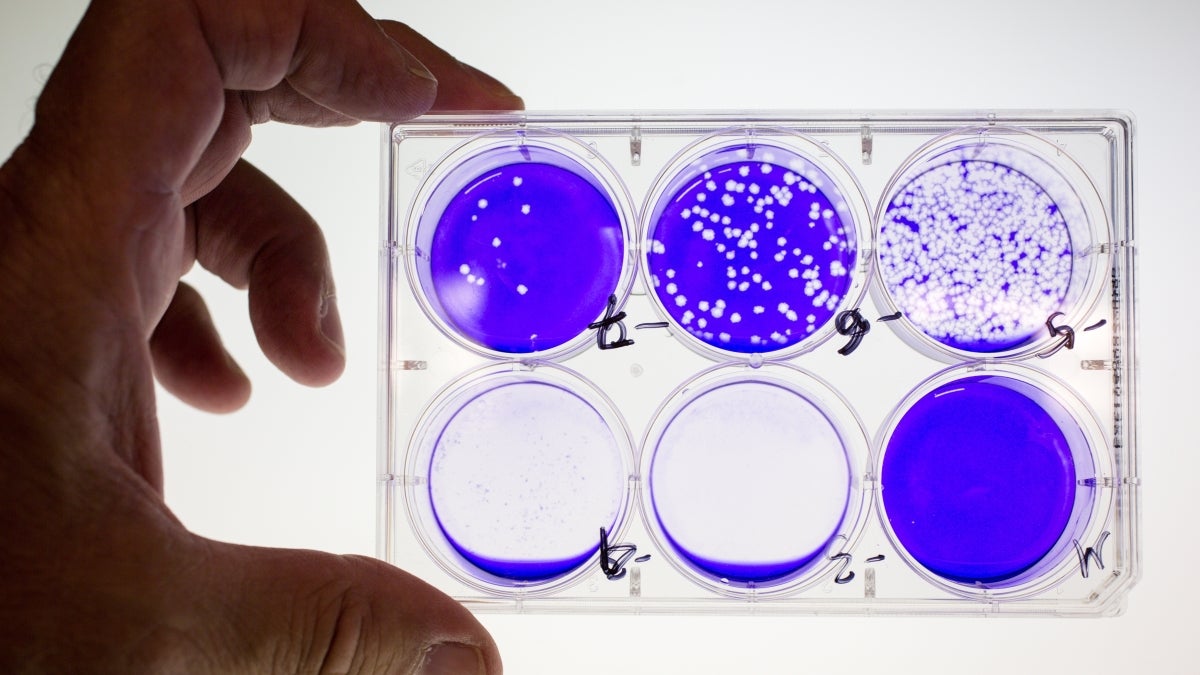

Top photo by Charlie Leight/ASU News
